This is the third part of the Kafka series. It covers how Spring Boot microservices can communicate with each other over a Kafka channel.

If you want to check out the previous blogs on Kafka:

Apache Kafka (Part-1) - covers introduction, architecture, and use cases.

Apache Kafka (Part-2) - covers CLI implementation of Kafka.

Introduction

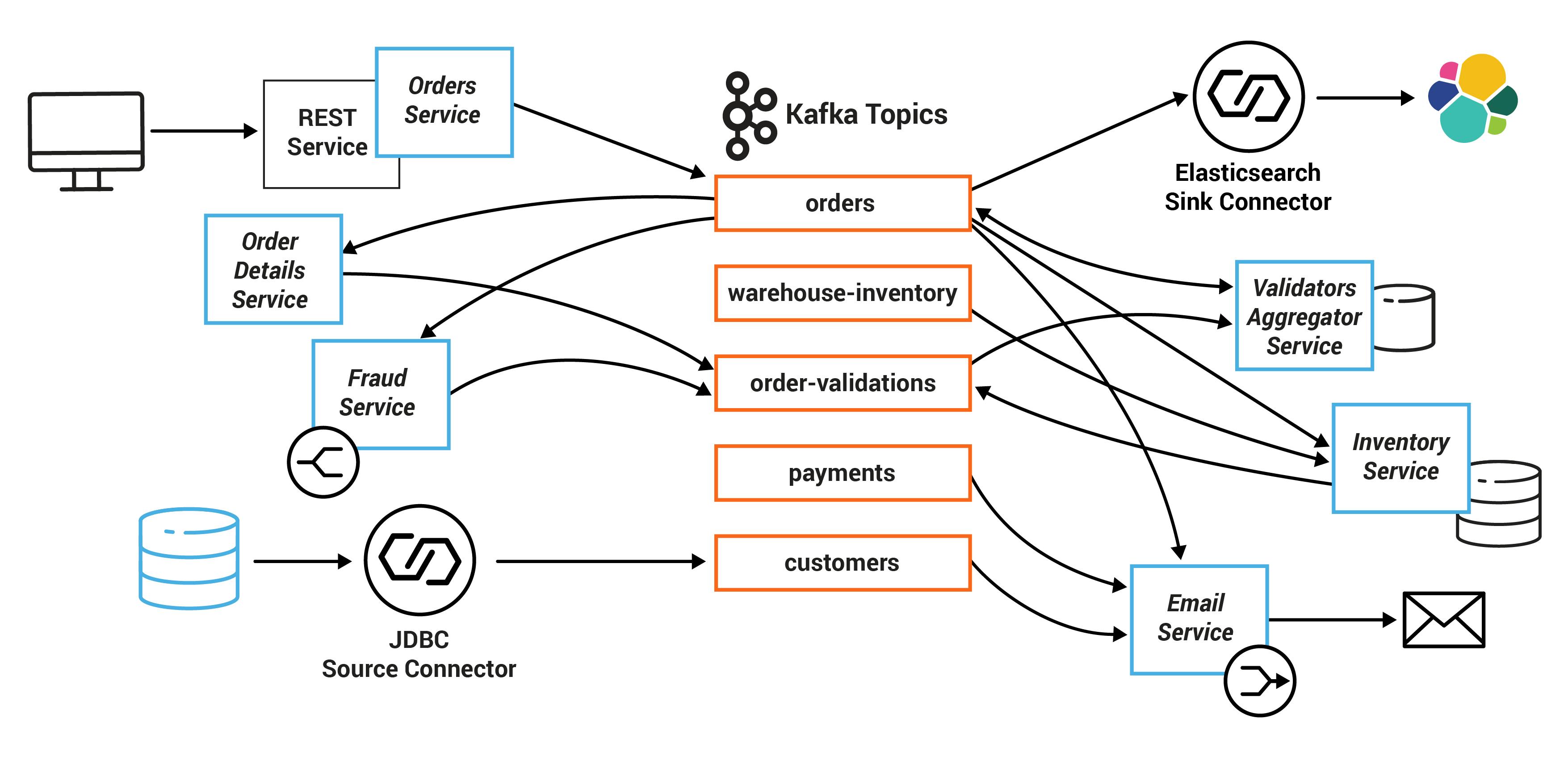

In a microservice architecture, direct service-to-service communication becomes hard to manage as the number of services grows. Kafka addresses this by acting as a shared channel between them. The channel consists of topics organized by functionality. Services publish data to these topics and consume data from them, which makes the Kafka channel the centralized source of truth for the data they exchange.
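To make the idea of topic-based decoupling concrete, here is a minimal in-memory sketch. It is not Kafka's API and leaves out partitions, persistence, and networking. Every name in it (`InMemoryChannel`, `publish`, `poll`, the `orders` topic, the service names) is hypothetical and chosen only for illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy in-memory model of topic-based messaging. This is NOT Kafka's API;
// all names are hypothetical and exist only for illustration.
final class InMemoryChannel {
    // Each topic is an append-only list of messages.
    private final Map<String, List<String>> topics = new HashMap<>();
    // Each (consumer, topic) pair remembers how far it has read.
    private final Map<String, Integer> offsets = new HashMap<>();

    // A producer only needs the topic name, not the identity of any consumer.
    void publish(String topic, String message) {
        topics.computeIfAbsent(topic, t -> new ArrayList<>()).add(message);
    }

    // Returns the messages this consumer has not seen yet and advances its offset.
    List<String> poll(String consumer, String topic) {
        List<String> log = topics.getOrDefault(topic, new ArrayList<>());
        String key = consumer + "@" + topic;
        int from = offsets.getOrDefault(key, 0);
        offsets.put(key, log.size());
        return new ArrayList<>(log.subList(from, log.size()));
    }

    public static void main(String[] args) {
        InMemoryChannel channel = new InMemoryChannel();
        channel.publish("orders", "order-1 created");
        channel.publish("orders", "order-2 created");

        // Two independent services read the same topic without affecting each other.
        System.out.println(channel.poll("billing-service", "orders"));
        System.out.println(channel.poll("shipping-service", "orders"));
        // A second poll returns nothing new for billing-service.
        System.out.println(channel.poll("billing-service", "orders"));
    }
}
```

The producer never references a consumer, and each consumer tracks its own read position. That is the property that lets services be added or removed without changing the others.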

This blog post will demonstrate the integration of two Spring Boot applications with Kafka. One of the applications will produce messages to the Kafka channel, while the other application will be responsible for consuming the messages.

Installation and Setup

To download and install Kafka, please refer to the official Apache Kafka guide.

Next, we need to add the Kafka dependencies to our Spring Boot application. Add the following to the pom.xml file:

    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
        <version>2.7.2</version> <!-- Use latest version -->
    </dependency>
    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>2.8.0</version> <!-- Use latest version -->
    </dependency>

Creating a Kafka Producer

Now, we need to create a Kafka Producer that will publish messages to a Kafka topic. We can create a Kafka Producer using the KafkaTemplate class provided by Spring Kafka.

    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.stereotype.Component;

    @Component
    public class KafkaProducer {

        private final KafkaTemplate<String, String> kafkaTemplate;

        // Spring injects the auto-configured KafkaTemplate through the constructor.
        public KafkaProducer(KafkaTemplate<String, String> kafkaTemplate) {
            this.kafkaTemplate = kafkaTemplate;
        }

        // Publishes the message to the given topic without a key.
        public void sendMessage(String topic, String message) {
            kafkaTemplate.send(topic, message);
        }
    }

This is the simplest possible producer. We call the sendMessage() method with the name of the target topic and the message to send.

The send() method has related variants, such as sendDefault(), which publishes to the default topic instead of taking a topic argument. The default topic is read from spring.kafka.template.default-topic in the configuration file (application.yml).

In the previous blog, we created a topic named 'tano_topic' on a broker running on port 9092. We need to add the following configuration to application.yml:

    spring:
      profiles: local # On Spring Boot 2.4+, use spring.config.activate.on-profile instead
      kafka:
        template:
          default-topic: tano_topic # This is the default topic
        producer:
          bootstrap-servers: localhost:9092 # Port where broker is running
          # String key serializer, matching KafkaTemplate<String, String> above
          key-serializer: org.apache.kafka.common.serialization.StringSerializer
          value-serializer: org.apache.kafka.common.serialization.StringSerializer
        admin:
          properties:
            bootstrap.servers: localhost:9092

Serializers are used to convert messages into a binary format that can be transmitted over the network. Key and value serializers are responsible for serializing the key and value of the Kafka message, respectively.
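To make "binary format" concrete, the sketch below reproduces the byte layouts that Kafka's integer and string serializers write: four big-endian bytes for an integer and UTF-8 bytes for a string. It uses only the Java standard library. The class and method names (`SerializerSketch`, `serializeInt`, and so on) are hypothetical stand-ins, not the Kafka classes themselves.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Stand-alone sketch of the byte layouts involved. Class and method names
// are hypothetical and do not come from the Kafka client library.
final class SerializerSketch {
    // Integer -> 4 bytes, big-endian (the layout an integer serializer writes).
    static byte[] serializeInt(int value) {
        return ByteBuffer.allocate(4).putInt(value).array();
    }

    // String -> UTF-8 bytes (the default encoding of a string serializer).
    static byte[] serializeString(String value) {
        return value.getBytes(StandardCharsets.UTF_8);
    }

    // Reverse of serializeInt; rejects payloads that are not exactly 4 bytes.
    static int deserializeInt(byte[] data) {
        if (data.length != 4) {
            throw new IllegalArgumentException("expected 4 bytes, got " + data.length);
        }
        return ByteBuffer.wrap(data).getInt();
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(serializeInt(42)));      // [0, 0, 0, 42]
        System.out.println(Arrays.toString(serializeString("hi"))); // [104, 105]
        System.out.println(deserializeInt(serializeInt(42)));       // 42
    }
}
```

Because the bytes carry no type information, the consumer has to be configured with the deserializer that matches the producer's serializer. Feeding the two-byte string payload to `deserializeInt` fails the length check in this sketch.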

Creating a Kafka Consumer

Next, we need to create a Kafka Consumer that will consume messages from a Kafka topic. We can create a Kafka Consumer using the @KafkaListener annotation provided by Spring Kafka.

    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.stereotype.Component;

    @Component
    public class KafkaConsumer {

        // The group ID is resolved from spring.kafka.consumer.group-id in application.yml.
        @KafkaListener(topics = "tano_topic", groupId = "${spring.kafka.consumer.group-id}")
        public void consume(String message) {
            System.out.println("Received message: " + message);
        }
    }

This is the simplest possible consumer. It consumes messages from the topic named 'tano_topic', and the group ID is read from the consumer's group-id property in the configuration file (application.yml).

The group ID determines which consumers belong to which consumer group. You can assign group IDs via configuration when you create the consumer client. If four consumers with the same group ID subscribe to the same topic, the topic's partitions are divided among them, so they share the work of reading the topic and each message is handled by only one consumer in the group.

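The corresponding consumer configuration in application.yml:

    spring:
      profiles: local # On Spring Boot 2.4+, use spring.config.activate.on-profile instead
      kafka:
        consumer:
          bootstrap-servers: localhost:9092
          # Deserializers must match the serializers used by the producer
          key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          group-id: tano-group-id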
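How consumers with the same group ID share a topic can be sketched with a simplified round-robin assignment of partitions. This is an illustrative model only: Kafka's real assignors are more sophisticated, and the names used here (`GroupAssignmentSketch`, `assign`, `c1` to `c4`) are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified model of how partitions are shared within one consumer group.
// Names are hypothetical; real Kafka assignors are more sophisticated.
final class GroupAssignmentSketch {
    // Deals partitions 0..partitionCount-1 to the consumers in round-robin order.
    static Map<String, List<Integer>> assign(List<String> consumers, int partitionCount) {
        if (consumers.isEmpty()) {
            throw new IllegalArgumentException("a group needs at least one consumer");
        }
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        for (String consumer : consumers) {
            assignment.put(consumer, new ArrayList<>());
        }
        for (int partition = 0; partition < partitionCount; partition++) {
            // Each partition gets exactly one owner inside the group.
            String owner = consumers.get(partition % consumers.size());
            assignment.get(owner).add(partition);
        }
        return assignment;
    }

    public static void main(String[] args) {
        List<String> group = Arrays.asList("c1", "c2", "c3", "c4");
        // Six partitions, four consumers: the work is spread across the group.
        System.out.println(assign(group, 6)); // {c1=[0, 4], c2=[1, 5], c3=[2], c4=[3]}
        // Two partitions, four consumers: two consumers stay idle.
        System.out.println(assign(group, 2)); // {c1=[0], c2=[1], c3=[], c4=[]}
    }
}
```

The second call shows a practical consequence: adding more consumers to a group than the topic has partitions does not increase parallelism, because each partition is read by only one member of the group.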

Conclusion

In this blog, we demonstrated how to implement Kafka messaging in Spring Boot, with a focus on producing and consuming messages between different microservices. We covered key concepts such as topics, producers, and consumers, and provided examples of how to configure and use these components in Spring Boot applications.

Overall, Kafka's integration with Spring Boot offers a robust and reliable solution for implementing microservices-based architectures. It allows developers to focus on building the business logic of the microservices, while Kafka takes care of the communication and messaging infrastructure.